3D at the edge

What is edge computing?

For many internet of things (IoT) applications, a large amount of data is generated during operation, and that data needs to be processed, analyzed, and acted upon. Edge computing refers to a distributed architecture in which that data is handled close to the edge of the system, where the devices creating it are located, rather than being transferred to and processed in a central or cloud location. Processing data close to the sensors lowers latency. It also saves cost, because the bulk of the information doesn't need to travel to a distant server before a response can be made. This efficiency is especially important for consumer applications like home health monitoring, connected fitness, or home robotics. Home fitness equipment that consumed all of the available internet bandwidth to monitor your progress would not appeal to consumers.

Depth at the edge

Depth makes a difference in many IoT applications, for example in home health care. Take fall detection: to monitor something like body pose, depth cameras are crucial. Pose or skeletal tracking applications generally run machine learning models that have been trained to recognize features such as limb positions and joint locations. Running those models continuously and in real time in the cloud would consume bandwidth that most consumers wouldn't want to give up. It would also respond more slowly than processing done on site. Flagging a fall when it happens is time critical, and an internet outage or bandwidth problem could prevent a fall from being detected at all. For these and similar reasons, supporting real-time processing of depth data at the edge is an obvious benefit.
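To make the time-critical part concrete, here is a minimal sketch of the kind of rule an edge device could apply on top of pose tracking. It assumes an upstream pose model (not shown, and not part of any RealSense API) has already converted each depth frame into a head height in metres above the floor. The `FallDetector` class and its thresholds (`drop_m`, `window_s`, `floor_m`) are hypothetical and illustrative, not tuned values or a production detector. Depth is what makes the rule possible, because a plain 2D image gives no metric height above the floor.

```python
from collections import deque
from typing import Deque, Tuple


class FallDetector:
    """Toy fall detector over a stream of head-height estimates.

    Assumes a hypothetical upstream pose model has already turned each
    depth frame into a head height in metres above the floor. The
    thresholds are illustrative placeholders, not tuned values.
    """

    def __init__(self, drop_m: float = 0.8, window_s: float = 1.0, floor_m: float = 0.5):
        self.drop_m = drop_m      # minimum height drop that counts as a fall
        self.window_s = window_s  # how quickly (in seconds) the drop must happen
        self.floor_m = floor_m    # head must end up at or below this height
        self._history: Deque[Tuple[float, float]] = deque()

    def update(self, t: float, head_height: float) -> bool:
        """Add one sample (timestamp in s, height in m); return True if it completes a fall."""
        # Discard samples that fall outside the look-back window.
        while self._history and t - self._history[0][0] > self.window_s:
            self._history.popleft()
        # A fall: head is now near the floor after a large drop within the window.
        fell = head_height <= self.floor_m and any(
            earlier - head_height >= self.drop_m for _, earlier in self._history
        )
        self._history.append((t, head_height))
        return fell


if __name__ == "__main__":
    detector = FallDetector()
    # Standing at about 1.6 m, then the head drops to 0.3 m within 0.4 s.
    for t, h in [(0.0, 1.6), (0.5, 1.6), (0.9, 0.3)]:
        print(t, detector.update(t, h))
```

Because the detector keeps only about one second of history, it runs comfortably on an edge device. A slow, deliberate lie-down spread over several seconds is not flagged, since the large drop never happens inside a single window.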

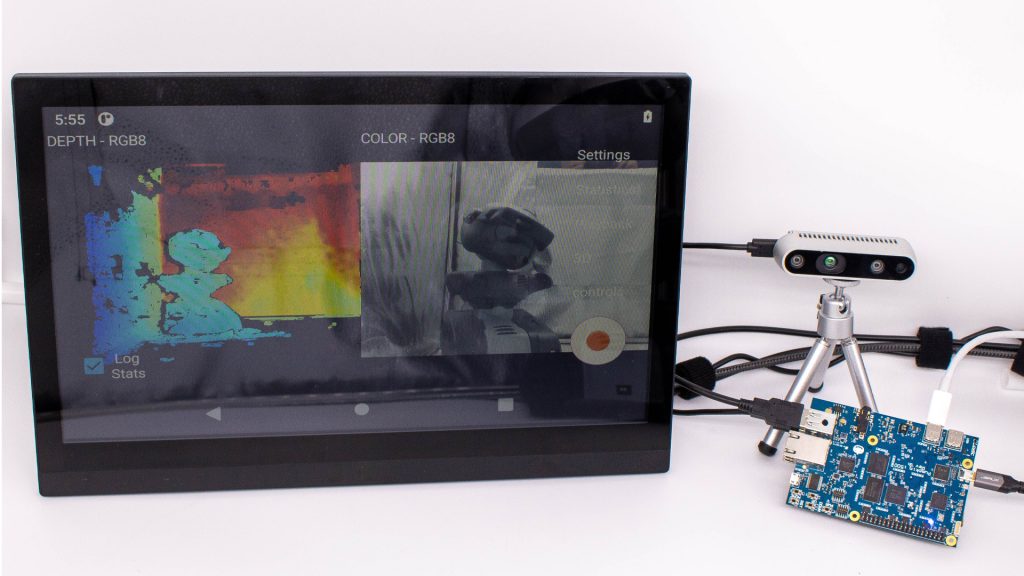

Intel RealSense Depth Camera D435 and Pumpkin i500

The market is shifting AI and machine learning applications from the cloud out to the edge. This allows smart devices with built-in intelligence to thrive in low-bandwidth environments, where only metadata is sent to the cloud. Depth and 3D applications in the robotics and consumer electronics markets are just coming of age on the edge.
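A rough bandwidth calculation shows why sending only metadata matters. The sketch below assumes an uncompressed depth stream configured at 1280x720 with 16 bits (2 bytes) per pixel at 30 fps. That works out to 55,296,000 bytes per second, or roughly 442 Mbit/s. By contrast, a single event summarizing the result takes well under 100 bytes. The event fields (`device`, `event`, `t`, `confidence`) are hypothetical and only illustrate the kind of payload an edge device might upload.

```python
import json


def raw_depth_bytes_per_second(width: int, height: int, fps: int, bytes_per_pixel: int = 2) -> int:
    """Bandwidth of an uncompressed depth stream (16-bit depth = 2 bytes per pixel)."""
    return width * height * bytes_per_pixel * fps


def event_metadata_bytes(event: dict) -> int:
    """Size in bytes of a compact JSON event, the kind of metadata an edge device might send."""
    return len(json.dumps(event, separators=(",", ":")).encode("utf-8"))


if __name__ == "__main__":
    # Assumed stream settings: 1280x720, 16-bit depth, 30 frames per second.
    raw = raw_depth_bytes_per_second(1280, 720, 30)
    # Hypothetical event payload; field names are illustrative only.
    event = {"device": "living-room-1", "event": "fall", "t": 1712.4, "confidence": 0.93}
    meta = event_metadata_bytes(event)
    print(f"raw depth stream: {raw * 8 / 1e6:.1f} Mbit/s")
    print(f"one event: {meta} bytes")
```

Real deployments would compress video or send more frequent updates. Even so, the gap of several orders of magnitude between streaming raw depth and uploading occasional events is the core of the edge argument.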

Pumpkin i500

The Pumpkin i500 EVK is designed by OLogic and powered by the MediaTek AIoT i500. It is a single-board computer (SBC) built to support computer vision and AI edge computing. The Pumpkin i500 is designed to bring 3D sensing to AI-accelerated IoT applications at the edge for the AIoT market. Combined with Intel RealSense depth cameras, it jump-starts the development of products with display and camera capabilities. Currently, Intel RealSense technologies are the only depth camera solutions tested and proven to work with the Pumpkin i500. Applications that could use the Pumpkin i500 with Intel RealSense include consumer products for connected fitness, home health monitoring, home robotics, and connected pets. In all of these use cases, capabilities like body pose estimation, gesture recognition, or hand tracking are important. Depth technology lets these applications go beyond simple 2D vision and machine learning by running 3D machine learning algorithms out on the edge.

Pumpkin i500 and Intel RealSense Depth Camera D435

The IoT applications of the future are ones where 3D data is processed by AI applications out at the edge of the network. Today, many of these applications use only 2D images or video. With the explosive adoption of neural processors designed for edge computing that can run ONNX (https://onnx.ai/) models, it is only a matter of time until 3D becomes more pervasive than 2D. Without depth perception, these new models will not be possible. Other interesting use cases include helping to enforce social distancing and mask wearing, or enabling touchless interfaces.
