Beginner’s guide to depth (Updated)

We talk a lot about depth technologies on the Intel® RealSense™ blog, for what should be fairly obvious reasons, but often with the assumption that readers already know what depth cameras are, understand some of the differences between the types, or have some idea of what is possible with a depth camera. This post assumes the opposite: that you know nothing and are brand new to depth. We will cover what depth cameras are, the main types of depth camera and why the differences between them matter, how you might get started, and more.

What are depth cameras?

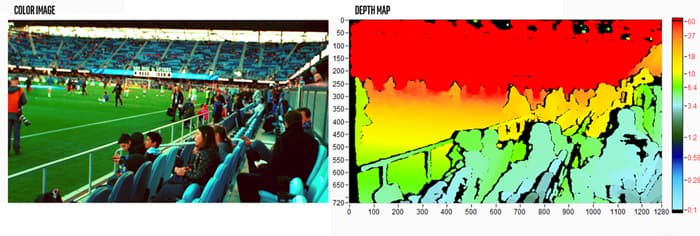

Standard digital cameras output images as a 2D grid of pixels. Each pixel has values associated with it; usually we think of those as Red, Green and Blue, or RGB. In the common 8-bit format, each channel is a number from 0 to 255, so black, for example, is (0,0,0) and a pure bright red is (255,0,0). Thousands to millions of these pixels together create the kind of photographs we are all very familiar with. A depth camera, on the other hand, has pixels with a different numerical value associated with them: the distance from the camera, or “depth.” Some depth cameras have both an RGB and a depth system, which can give pixels with all four values, or RGBD.
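To make the difference concrete, here is a minimal Python sketch of a single RGBD pixel. The `RGBDPixel` class is hypothetical, and it assumes depth is stored as whole millimetres with 0 meaning "no valid reading"; real cameras and SDKs may use other units and conventions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RGBDPixel:
    """One pixel from an RGB-D camera: 8-bit color plus a depth reading.

    Hypothetical layout: depth is in millimetres and 0 means "no reading".
    """
    r: int
    g: int
    b: int
    depth_mm: int

    def __post_init__(self) -> None:
        # Each 8-bit color channel must fit in the 0..255 range.
        for name, value in (("r", self.r), ("g", self.g), ("b", self.b)):
            if not 0 <= value <= 255:
                raise ValueError(f"{name}={value} is outside 0..255")
        if self.depth_mm < 0:
            raise ValueError("depth cannot be negative")

    @property
    def depth_m(self) -> float:
        """Depth converted from millimetres to metres."""
        return self.depth_mm / 1000.0


black_at_1m = RGBDPixel(0, 0, 0, 1000)     # black, 1 m from the camera
red_at_2_5m = RGBDPixel(255, 0, 0, 2500)   # pure bright red, 2.5 m away
print(black_at_1m.depth_m, red_at_2_5m.depth_m)
```

A plain RGB pixel is the same structure without the `depth_mm` field; the depth value is what a depth camera adds.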

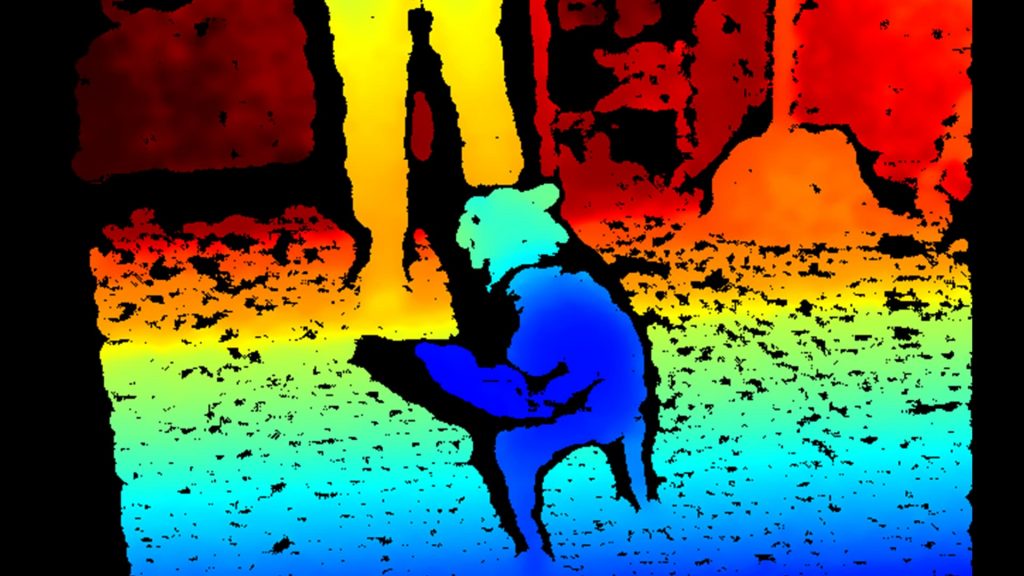

The output from a depth camera can be displayed in a variety of ways. In the example shown here, the color image is displayed side by side with the depth image, where each color in the depth map represents a different distance from the camera. In this case, cyan is closest to the camera and red is farthest.

The specific colors used for the depth map don’t matter; it is simply rendered this way to make the distances easy to visualize.
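As a rough illustration of how such a visualization might be produced, the sketch below maps each depth value onto a hue scale running from cyan (near) to red (far), passing through green and yellow in between. The function name, the near/far limits, and drawing missing depth (0) as black are illustrative assumptions, not how any particular viewer does it.

```python
import colorsys


def colorize_depth(depth_m, near_m, far_m):
    """Map a depth in metres to an 8-bit RGB tuple: near -> cyan, far -> red.

    Depths outside [near_m, far_m] are clamped to the end colors.
    Assumption: a depth of 0 means "no data" and is drawn black.
    """
    if depth_m <= 0:
        return (0, 0, 0)
    # Normalise to 0.0 (near) .. 1.0 (far), clamping out-of-range values.
    t = (depth_m - near_m) / (far_m - near_m)
    t = min(max(t, 0.0), 1.0)
    # On colorsys's 0..1 hue wheel, 0.5 is cyan and 0.0 is red;
    # depths in between pass through green and yellow.
    hue = 0.5 * (1.0 - t)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))


depth_row = [0.0, 0.5, 1.25, 2.0, 3.0]   # one row of a depth image, in metres
print([colorize_depth(d, near_m=0.5, far_m=2.0) for d in depth_row])
```

Changing `near_m` and `far_m` only changes how the colors are spread across the scene; the underlying depth values stay the same, which is why the color choice itself doesn’t matter.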

Types of depth camera

There are several methods for calculating depth, each with its own strengths, weaknesses and optimal operating conditions. Which one you pick will almost certainly depend on what you are trying to build. How far does it need to see? What sort of accuracy do you need? Does it need to operate outdoors? Here’s a quick breakdown of camera types and roughly how each one works.

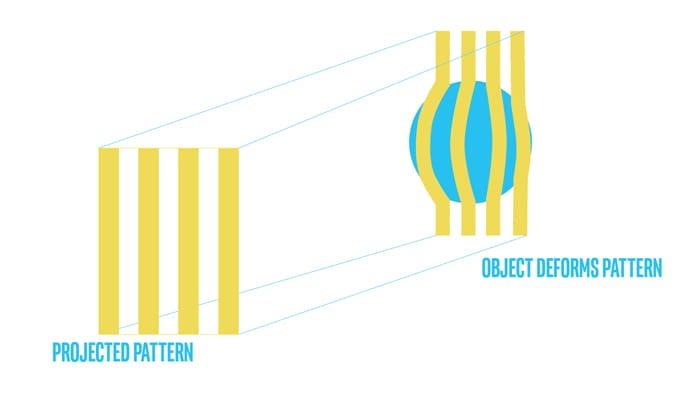

Structured Light and Coded Light

Structured light and coded light depth cameras are similar, though not identical, technologies. Both rely on projecting light (usually infrared light) from an emitter onto the scene. The projected light is patterned, either spatially, over time, or in some combination of the two. Because the projected pattern is known, the way the camera’s sensor sees that pattern in the scene provides the depth information. For example, if the pattern is a series of stripes projected onto a ball, the stripes would deform and bend around the surface of the ball in a specific way.

If the ball moves closer to the emitter, the pattern changes too. Using the disparity between the expected image and the actual image seen by the camera, the distance from the camera can be calculated for every pixel. The Intel® RealSense™ SR300 line of devices are coded light cameras. Because this technology relies on accurately seeing a projected pattern of light, coded and structured light cameras do best indoors at relatively short ranges (depending on the power of the light emitted by the camera). They are also vulnerable to interference from other cameras or devices emitting infrared light. Ideal uses for coded light cameras include gesture recognition and background segmentation (also known as virtual green screen).
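One common way to turn that shift into a distance (assumed here, not a description of the SR300’s internal processing) is to record the pattern once on a flat surface at a known reference distance Z0, then measure how far each part of the pattern moves sideways in the live image. With focal length f in pixels, projector-to-camera baseline b in metres and observed shift d in pixels, triangulation gives 1/Z = 1/Z0 + d/(f·b). The function and camera numbers below are hypothetical.

```python
def depth_from_pattern_shift(shift_px, focal_px, baseline_m, ref_depth_m):
    """Depth (metres) of a point whose projected pattern has shifted by
    shift_px pixels relative to a reference image captured on a flat
    surface ref_depth_m away.

    Model (an assumption): 1/Z = 1/Z0 + d / (f * b), with a positive shift
    meaning the point is closer than the reference surface.
    """
    inv_depth = 1.0 / ref_depth_m + shift_px / (focal_px * baseline_m)
    if inv_depth <= 0:
        raise ValueError("shift too negative: point would be at or beyond infinity")
    return 1.0 / inv_depth


# Hypothetical camera: 600 px focal length, 5 cm projector-camera baseline,
# reference surface 1 m away.
for shift in (0.0, 6.0, -10.0):
    z = depth_from_pattern_shift(shift, focal_px=600, baseline_m=0.05, ref_depth_m=1.0)
    print(f"shift {shift:+.0f} px -> depth {z:.3f} m")
```

A zero shift returns the reference distance, and larger positive shifts mean closer points, matching the intuition of the ball moving toward the emitter.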

The new Intel® RealSense™ Depth Camera SR305 is a coded light depth camera and a great place for beginners to start experimenting with depth development. As a short range indoor camera, it is a low risk way to get started. Since it uses the Intel® RealSense™ SDK 2.0, any code you write or anything you develop with this camera will also work with the other depth cameras in the Intel RealSense product lines, letting you upgrade from short range indoor cameras to longer range, higher resolution or outdoor cameras.


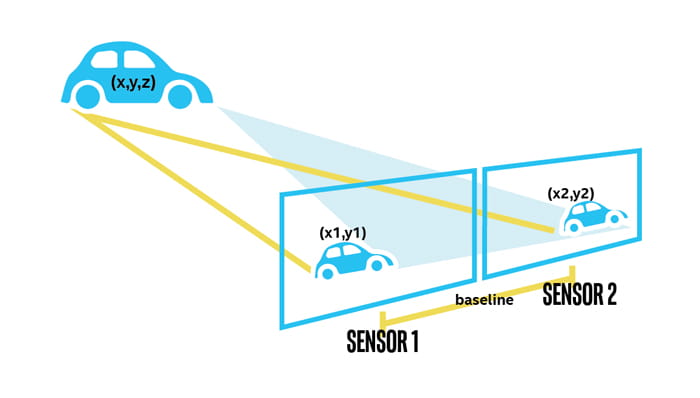

Stereo Depth

Stereo depth cameras also often project infrared light onto a scene to improve the accuracy of the data, but unlike coded or structured light cameras, stereo cameras can use any light to measure depth. For a stereo camera, all infrared noise is good noise. Stereo depth cameras have two sensors, spaced a small distance apart. The camera takes the images from these two sensors and compares them; since the distance between the sensors is known, these comparisons give depth information. This is similar to how we use two eyes for depth perception: our brains compare the slightly different views from each eye. Objects close to us appear to shift significantly from eye to eye (or sensor to sensor), whereas an object in the far distance appears to shift very little.
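The comparison step can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: the two images are rectified (so a point appears on the same row in both), we look at a single row, and we use simple sum-of-absolute-differences block matching, a textbook technique rather than the algorithm D400 cameras run in hardware. Once the shift (disparity) d in pixels is found, depth follows from Z = f × B / d, where f is the focal length in pixels and B the baseline in metres. The function names and numbers are hypothetical.

```python
def match_disparity(left_row, right_row, x, window=2, max_disp=16):
    """Disparity (pixels) of column x of left_row, found in right_row.

    In a rectified pair, a point at column x in the left image appears at
    column x - d in the right image. We try each d and keep the one whose
    small window of pixels has the lowest sum of absolute differences (SAD).
    """
    if not window <= x < len(left_row) - window:
        raise ValueError("the matching window around x must fit inside the row")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        xr = x - d
        if xr - window < 0:
            break  # the candidate window would run off the right image
        cost = sum(
            abs(left_row[x + k] - right_row[xr + k])
            for k in range(-window, window + 1)
        )
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulate: Z = f * B / d. Zero disparity means "too far to measure"."""
    if disparity_px <= 0:
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Synthetic row with one bright feature; the right view sees it 4 px further left.
left = [10] * 9 + [50, 90, 50] + [10] * 8
right = left[4:] + [10] * 4
d = match_disparity(left, right, x=10)
print(d, depth_from_disparity(d, focal_px=640, baseline_m=0.05))
```

Because d sits in the denominator, nearby objects produce large disparities and distant ones small disparities, which is the same effect you notice when closing one eye and then the other.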

Because stereo cameras can use any visual features to measure depth, they work well in most lighting conditions, including outdoors. The addition of an infrared projector means that the camera can still perceive depth details in low light. The Intel® RealSense™ D400 series cameras are stereo depth cameras. Another benefit of this type of depth camera is that there is no inherent limit to how many you can use in a particular space: the cameras don’t interfere with each other in the way that coded light or time of flight cameras do.

The range these cameras can measure is directly related to how far apart the two sensors are: the wider the baseline, the farther the camera can measure depth accurately. In fact, astronomers use a very similar technique to measure the distance to faraway stars. They measure the position of a star in the sky at one point in time, and then measure the same star six months later, when the Earth is on the opposite side of its orbit. In this way, they can calculate the distance (or depth) of the star using a baseline of around 300 million kilometers.
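The link between baseline and range can be made more precise. Differentiating Z = f × B / d shows that a small disparity error Δd produces a depth error of roughly ΔZ ≈ Z² × Δd / (f × B): the error grows with the square of the distance and shrinks as the baseline widens. The sketch below tabulates this for two hypothetical baselines; the 640 px focal length and 0.1 px matching accuracy are illustrative assumptions, not specifications of any RealSense camera.

```python
def depth_error_estimate(depth_m, focal_px, baseline_m, disparity_err_px=0.1):
    """Approximate depth error (metres) for a stereo camera.

    From Z = f * B / d, |dZ/dd| = Z**2 / (f * B), so a disparity error of
    disparity_err_px pixels gives roughly Z**2 * disparity_err_px / (f * B).
    The 0.1 px default is an assumed sub-pixel matching accuracy.
    """
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)


for baseline_m in (0.05, 0.10):
    for depth_m in (1.0, 2.0, 4.0):
        err_mm = 1000 * depth_error_estimate(depth_m, focal_px=640, baseline_m=baseline_m)
        print(f"baseline {baseline_m:.2f} m, depth {depth_m:.0f} m: about {err_mm:.1f} mm")
```

Under this model, doubling the baseline halves the error at every distance, so for the same error budget the camera reaches about √2 (roughly 1.4) times as far.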

Time of Flight and LiDAR

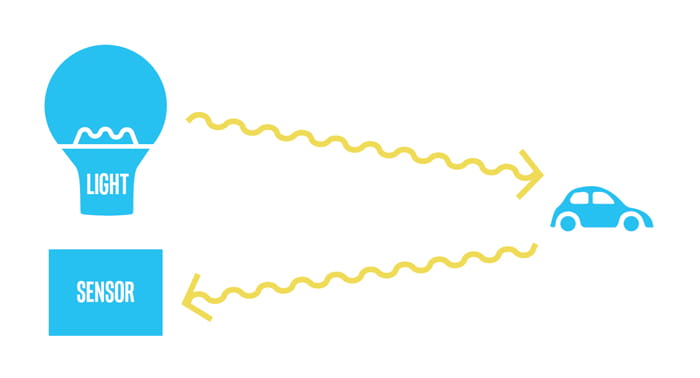

Each kind of depth camera relies on known information in order to infer depth. In stereo, the distance between the sensors is known. In coded light and structured light, the pattern of light is known. In the case of time of flight, the speed of light is the known variable used to calculate depth. LiDAR sensors, which you may be familiar with from self-driving cars, are a type of time of flight camera that uses laser light to calculate depth. Time of flight devices emit light, either sweeping it across the scene or illuminating the whole scene at once, and then measure how long that light takes to get back to a sensor on the camera. Depending on the power and wavelength of the light, time of flight sensors can measure depth at significant distances, for example when mapping terrain from a helicopter.
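For a direct (pulsed) time of flight measurement, the arithmetic is simple: the light travels to the object and back, so the distance is half the round-trip path, d = c × t / 2. The minimal Python sketch below shows that calculation, and also why the timing electronics must be so precise. Many time of flight cameras instead infer the delay from the phase shift of modulated light, which this sketch does not model; the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def distance_from_round_trip(round_trip_s):
    """Distance (m) to a target from the round-trip time of a light pulse.

    The pulse travels out and back, so only half the path is the distance.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def timing_resolution_for(distance_resolution_m):
    """Round-trip timing precision (s) needed to resolve a given depth step."""
    return 2.0 * distance_resolution_m / SPEED_OF_LIGHT_M_PER_S


print(f"{distance_from_round_trip(20e-9):.3f} m")        # 2.998 m for a 20 ns echo
print(f"{timing_resolution_for(0.001) * 1e12:.2f} ps")   # 6.67 ps per millimetre of depth
```

Resolving a millimetre of depth means timing the echo to within a few picoseconds, which is one reason time of flight hardware is specialized.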

The primary disadvantage of time of flight cameras is that they can be susceptible to interference from other cameras in the same space, and they can also work less well outdoors. Whenever the light hitting the sensor may have come from some other source, such as the sun or another camera, rather than from the camera’s own emitter, the quality of the depth image can degrade.

The new Intel® RealSense™ LiDAR Camera L515 is a time of flight, or LiDAR, based camera. While many LiDAR devices use mechanical systems that spin around to sweep the environment with light, the L515 uses a proprietary miniaturized scanning technology. This technology allows the L515 to be the world’s smallest high resolution LiDAR depth camera.

What can you do with depth?

All depth cameras give you additional understanding of a scene, and, more importantly, they give a device or system the ability to understand that scene without human intervention. While it’s possible for a computer to understand a 2D image, doing so requires significant investment and time to train a machine learning network (for more information on this topic you can check out this post). A depth camera inherently provides some information without any training; for example, it makes it easier to distinguish foreground objects from the background. This is useful for background segmentation: a depth camera can remove background objects from an image, allowing capture without a physical green screen.
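Depth-based background removal can be as simple as a distance threshold. The sketch below keeps color pixels closer than a cutoff and replaces the rest with a flat fill color. It assumes the depth and color images are already aligned pixel for pixel (in practice the two sensors view the scene from slightly different positions, so the frames must be aligned first), that depth is in metres, and that 0 means no reading; the function name and parameters are hypothetical.

```python
def remove_background(color, depth_m, max_depth_m, fill=(0, 255, 0)):
    """Return a copy of `color` with every pixel farther than max_depth_m
    (or with no depth reading) replaced by `fill`.

    color:   rows of (r, g, b) tuples
    depth_m: rows of depths in metres, assumed aligned pixel for pixel with color
    """
    if len(color) != len(depth_m):
        raise ValueError("color and depth images must have the same height")
    result = []
    for color_row, depth_row in zip(color, depth_m):
        if len(color_row) != len(depth_row):
            raise ValueError("color and depth images must have the same width")
        # Keep foreground pixels; treat 0 (no reading) as background.
        result.append([
            rgb if 0 < d <= max_depth_m else fill
            for rgb, d in zip(color_row, depth_row)
        ])
    return result


# 2x2 example: a person 0.8 to 1.2 m away in front of a wall 3 m away.
color = [[(200, 10, 10), (10, 200, 10)],
         [(10, 10, 200), (90, 90, 90)]]
depth = [[0.8, 3.0],
         [0.0, 1.2]]
print(remove_background(color, depth, max_depth_m=1.5))
```

Real pipelines usually clean up the result, for example by filling holes along object edges, but the core idea is one comparison per pixel.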

Depth cameras are also very useful in robotics and for autonomous devices like drones. If a robot or drone is navigating its way around a space, you would probably want it to automatically detect when something appears directly in front of it, to avoid a collision. In this video, that’s exactly the role the depth camera serves, in addition to building a 3D map or scan of the space.
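A very simple version of that collision check might look at the middle of each depth frame and stop if enough pixels are closer than a safety distance. This is a hypothetical sketch, not the logic used in the video: the window size, thresholds and the convention that 0 means no reading are all assumptions, and requiring several close pixels is one simple way to ignore a single noisy reading.

```python
def obstacle_ahead(depth_m, stop_distance_m, roi_fraction=0.5, min_pixels=20):
    """True if at least min_pixels valid pixels in the central window of the
    depth frame are closer than stop_distance_m.

    depth_m:      rows of depths in metres (0 = no reading, an assumption)
    roi_fraction: size of the central window as a fraction of frame height/width
    min_pixels:   how many close pixels it takes to trigger, so a single
                  noisy reading does not stop the robot
    """
    height, width = len(depth_m), len(depth_m[0])
    roi_h = max(1, int(height * roi_fraction))
    roi_w = max(1, int(width * roi_fraction))
    top, left = (height - roi_h) // 2, (width - roi_w) // 2
    close = sum(
        1
        for row in depth_m[top:top + roi_h]
        for d in row[left:left + roi_w]
        if 0 < d < stop_distance_m
    )
    return close >= min_pixels


# 10x10 frame of open space 3 m away, then a 4x4 object 0.4 m ahead.
frame = [[3.0] * 10 for _ in range(10)]
print(obstacle_ahead(frame, stop_distance_m=0.5, min_pixels=10))
for r in range(3, 7):
    for c in range(3, 7):
        frame[r][c] = 0.4
print(obstacle_ahead(frame, stop_distance_m=0.5, min_pixels=10))
```

Restricting the check to the center of the frame keeps objects off to the side, which the robot is not heading toward, from triggering a stop.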

These are just a few of the use cases for depth cameras; there are many more. What could you do with the added understanding of physical space that depth gives a computer or device?
