Building an AI-powered robotic arm assistant using the Intel RealSense D435

The Tabletop HandyBot is an AI-powered robotic arm assistant that carries out voice commands to bridge the digital and physical worlds.

Chatbots powered by modern large language models (LLMs) have opened many people’s eyes to the possibility of machines handling life’s mundane tasks for us. With their advanced capabilities, these chatbots can automate a wide range of tasks, from scheduling appointments and answering frequently asked questions to providing customer support and generating text summaries. This has not only freed up human time and resources but also enabled businesses to operate more efficiently and effectively.

But one thing that all of these tasks have in common is that they are entirely virtual. Sure, a chatbot can give you instructions on how to make dinner, but it cannot go any further. Engineer and roboticist Yifei Cheng has recently built a robot that can help to bridge the gap between the digital and physical worlds. Called the Tabletop HandyBot, Cheng’s robot arm leverages AI, such as LLMs, to interpret a user’s voice commands and devise a set of steps to carry them out in the real world. It is billed as a low-cost AI-powered robotic arm assistant — but before you get too excited, keep in mind that robotics can be a very expensive pastime. In this case, low-cost means about $2,300 in parts.

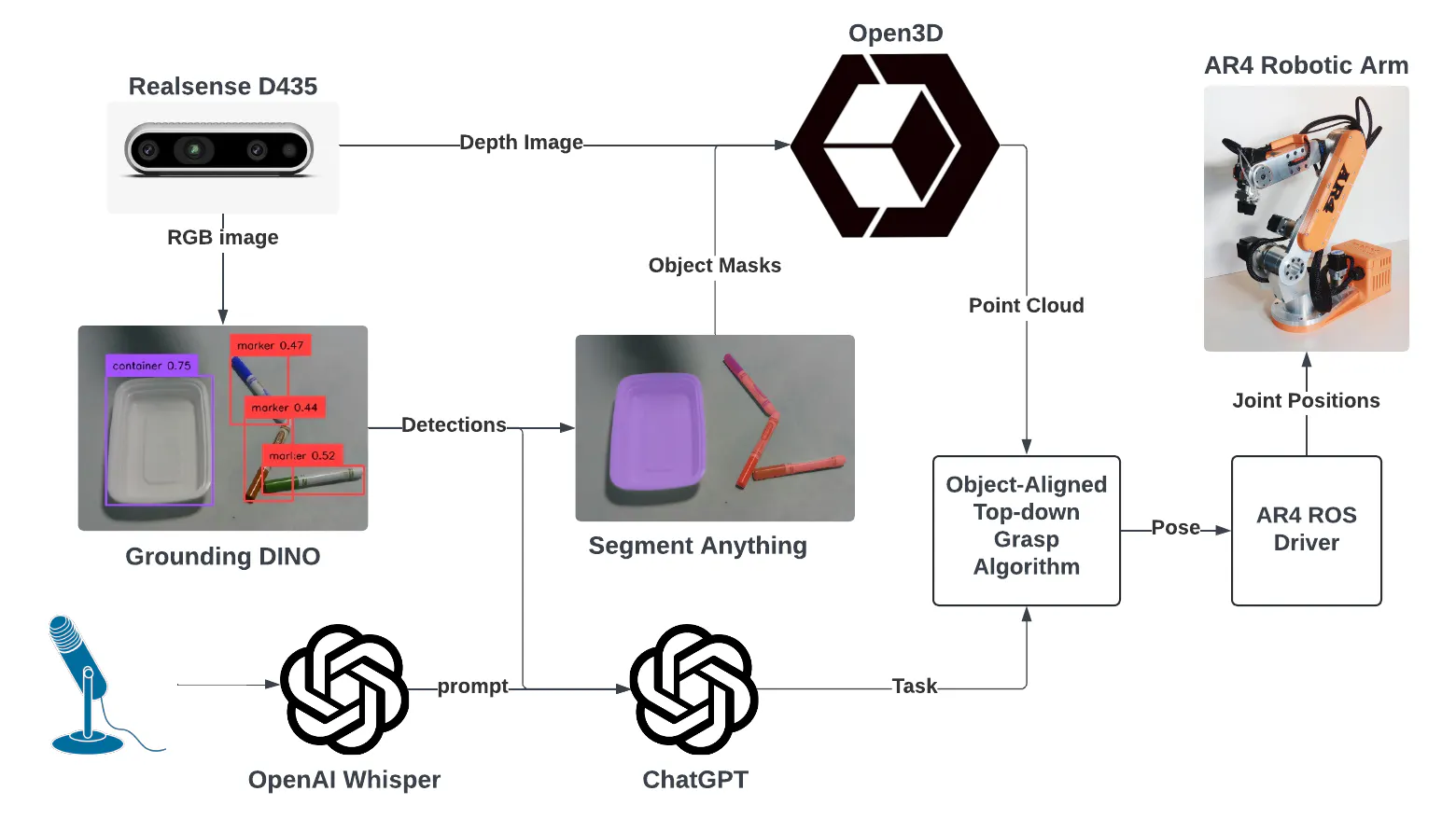

The system architecture (credits: Yifei Cheng)

At present, the Tabletop HandyBot can listen for a spoken command, then pick up a nearby object and move it to another location as directed. This is a very generic capability that could serve many applications, so it makes a good starting point. Given the tech stack, the robot could also be taught to do far more in the future.

On the hardware side, the Tabletop HandyBot consists of an off-the-shelf Annin Robotics AR4, a 6-DOF desktop-size industrial robot arm. It is paired with an Intel RealSense D435 depth camera that captures data about the robot's environment and a microphone that captures voice commands.

Voice commands are transcribed to text by OpenAI's Whisper speech-recognition model and then forwarded to ChatGPT as a prompt. Color images from the D435 are processed by the Grounding DINO object detection model, and its detections are also fed into ChatGPT. The detected objects are further processed by Segment Anything and Open3D before being passed to a grasping algorithm. ChatGPT's output is fed into that grasping algorithm as well, which works with the AR4 ROS driver to control the robot and carry out the user's request.
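The data flow described above can be sketched as a single orchestration function. This is a minimal illustration under stated assumptions, not the project's code: every stage is a hypothetical hook (`transcribe`, `detect`, `plan`, `segment`, `locate`, `execute`) that would wrap Whisper, Grounding DINO, ChatGPT, Segment Anything, an Open3D-based grasp step, and the AR4 ROS driver respectively, and the `Plan` structure is an assumed format for the LLM's answer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

import numpy as np

# Pixel bounding box: (x_min, y_min, x_max, y_max).
Box = Tuple[int, int, int, int]


@dataclass
class Plan:
    """Hypothetical structured answer requested from the LLM."""
    pick: str   # label of the object to pick up
    place: str  # label of the place to put it


@dataclass
class Stages:
    """Hypothetical hooks; each one would wrap a real model or driver."""
    transcribe: Callable[[bytes], str]                      # speech -> text (e.g. Whisper)
    detect: Callable[[np.ndarray], Dict[str, Box]]          # RGB -> labeled boxes (e.g. Grounding DINO)
    plan: Callable[[str, List[str]], Plan]                  # command + visible labels -> plan (e.g. ChatGPT)
    segment: Callable[[np.ndarray, Box], np.ndarray]        # RGB + box -> boolean mask (e.g. Segment Anything)
    locate: Callable[[np.ndarray, np.ndarray], np.ndarray]  # depth + mask -> 3D target (e.g. Open3D + grasping)
    execute: Callable[[np.ndarray, np.ndarray], None]       # pick/place targets -> motion (e.g. AR4 ROS driver)


def run_once(stages: Stages, audio: bytes, rgb: np.ndarray,
             depth: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Run one voice command through the pipeline and return the pick/place targets."""
    command = stages.transcribe(audio)
    detections = stages.detect(rgb)
    # The LLM sees both the spoken command and the labels of objects the camera found.
    plan = stages.plan(command, sorted(detections))
    # Reject plans that refer to objects the detector never saw.
    for label in (plan.pick, plan.place):
        if label not in detections:
            raise ValueError(f"planner chose {label!r}, which was not detected")
    pick_mask = stages.segment(rgb, detections[plan.pick])
    place_mask = stages.segment(rgb, detections[plan.place])
    pick_xyz = stages.locate(depth, pick_mask)
    place_xyz = stages.locate(depth, place_mask)
    stages.execute(pick_xyz, place_xyz)
    return pick_xyz, place_xyz
```

Keeping each model behind a plain callable lets the orchestration be tested with fake stages and makes it easier to swap in a different arm's driver, in the spirit of adapting the workflow to other hardware. The label check is a small guard against the LLM naming an object the camera did not detect.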

In the project's GitHub repository, Cheng details the exact hardware and software used and gives step-by-step instructions for building a Tabletop HandyBot. If you are one of the few people who already have this hardware on hand, you should be able to get a working system running quickly. Or, if you have some other robotic arm at your disposal, even a much less capable one, you could study the processing workflow and build a similar system of your own.

In any case, the demo video is well worth a watch. What would you do if you had a Tabletop HandyBot on your desk?

The Intel RealSense D435 depth camera provides the Tabletop HandyBot's 3D perception. Its bright, clear RGB images make it possible to detect and segment objects on the tabletop, and the calibration parameters the camera provides help compensate for camera skew, improving perception accuracy. The depth map is used to build point clouds of the objects in the scene, from which grasp points are calculated precisely, and the quality of those point clouds has been very good. The project connects to the camera through realsense-ros, the RealSense ROS wrapper, which is easy to install, works well out of the box, and makes it easy to customize the camera's configuration.
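Turning a depth map into an object point cloud is, at its core, pinhole back-projection: a pixel (u, v) with depth Z maps to X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy. The NumPy sketch below shows that step for the pixels inside a segmentation mask, then applies an assumed 4x4 camera-to-robot-base transform (as would come from a hand-eye calibration) and takes the centroid as a deliberately naive grasp point. It is not the project's grasping algorithm. It assumes depth is already in meters (raw D435 z16 values are multiplied by the device's depth scale, typically 0.001 m per unit), that fx, fy, cx, cy come from the camera's reported intrinsics (for example a `CameraInfo` message), and it ignores lens distortion. In practice, Open3D or pyrealsense2's `rs2_deproject_pixel_to_point` can perform the same projection.

```python
import numpy as np


def deproject_masked_depth(depth_m: np.ndarray, mask: np.ndarray,
                           fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Back-project masked depth pixels to an (N, 3) array of camera-frame points in meters.

    Ideal pinhole model; lens distortion is ignored (assumption).
    """
    # Rows index v, columns index u; zero depth marks invalid pixels and is skipped.
    v, u = np.nonzero(mask & (depth_m > 0))
    z = depth_m[v, u]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.column_stack((x, y, z))


def to_robot_frame(points_cam: np.ndarray, T_base_cam: np.ndarray) -> np.ndarray:
    """Apply a 4x4 homogeneous camera-to-base transform (hypothetical calibration result)."""
    homogeneous = np.hstack((points_cam, np.ones((len(points_cam), 1))))
    return (T_base_cam @ homogeneous.T).T[:, :3]


def naive_grasp_point(points: np.ndarray) -> np.ndarray:
    """Centroid of the object's points; a placeholder for a real grasp planner."""
    if len(points) == 0:
        raise ValueError("no valid depth pixels inside the mask")
    return points.mean(axis=0)
```

A real grasp planner would also choose a gripper orientation and check for collisions; the centroid only shows where the masked depth data ends up once it is expressed in the robot's frame.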
