Wake: a guided experience in mixed reality

Guest blog by Anna Henson, annahenson.com

One of the most embodied and intimate experiences you can have with another person is to dance together.

If you’ve ever danced with another person, you know what this feels like. If you’re already comfortable with each other, or you’re a trained dancer, this can be smooth and relaxed. However, for a number of reasons – you don’t know the other person, or you don’t spend your days in improvisational or choreographed dance sessions with others – it can be awkward, even scary. Dancing together requires trust.

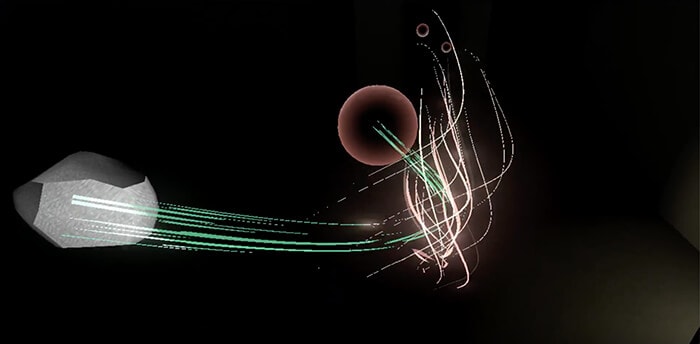

Now imagine that you’re wearing a bulky VR headset, with a long cable attached to you, and you’re in an unfamiliar virtual environment. You’re disoriented at first, but a voice outside the headset guides you through a slow breathing exercise and you begin to relax into the experience. You start to explore, learning how to move through this new territory as you go, and understanding what capabilities you have. Swirling pink and green lines move around you and you begin to play with them.

Then, a new presence appears in the midst of these abstractions. You’re not alone anymore – and this is not an avatar. It’s another person, another recognizable, individual person. They smile at you and slowly start to mirror your movements. Or maybe you’re mirroring theirs; it’s hard to tell. Now the green lines are attached to you both (technically, to the position of a sensor attached to your wrist), strung in between you like a jump rope. It’s a bit awkward at first, but you test out the scenario by moving your arm as if you were actually holding one end of the jump rope. The dancer does the same. Soon, you play together as if you were on a sidewalk.

Then the dancer extends their hand. Hesitantly, you reach out and realize you can see another hand, too. You wiggle your fingers to see if the fingers in the headset will do the same. In a moment that is not quite conscious, you realize you’re seeing your own bare hand inside the headset. You look back to the dancer, reading their facial expressions and body language, inviting you. You make what feels like eye contact, then move towards them. Your hand meets theirs, brushing the tips of your fingers together. This is not a simulation.

Technology co-evolves with us. The researcher Paul Dourish articulated this in his crucial book, Where the Action Is: The Foundations of Embodied Interaction. Technology increasingly shapes how we spend our time, how we communicate, even how we position our bodies (think about how you’re physically positioned when you look at your phone). However, no matter how realistic, fantastical, or advanced technology gets, we can never escape the persistent physical and psycho-social world in which we live. Increasingly, with advances in XR, digital sensing, depth sensing and computer vision, we are able to communicate context to a computer about the physical world around it.

On a technical level, these capabilities are advancing quickly and being integrated into more of the devices that surround us on a daily basis. (Look at the smartphone that you’re likely reading this on right now. Do you see two cameras on the front or back? Have you ever used a face filter on an app? That’s computer vision in action.) However, on an interpersonal, emotional, psycho-social level, the questions of human representation and interaction within increasingly blurry boundaries between physical and digital experiences – implicated significantly in spatial computing – have not been examined, researched, or publicly discussed at nearly the same level as the technical questions.

Shared space and communication in head-mounted immersive media (AR/VR/MR, or collectively “XR”) is a critical area of research in the development of these technologies. Most often, this “shared space” refers to the virtual environment, and assumes that users are physically distant (i.e., sharing a space only in the virtual world). However, what happens when we share physical space with others while in VR? If you’ve done VR before, chances are you were in the same room with others who were not in VR. Being blind to the changing physical and social circumstances around you while wearing a VR headset can be very disconcerting. How can we provide real-world, and specifically interpersonal, context to a VR user, and intentionally integrate this context into a shared experience with others? How can headset wearers and non-headset wearers develop strategies to communicate, interpret each other’s intentions and emotions, and negotiate the sharing of objects both physical and virtual, as well as each other’s very real bodies? Looking ahead, how can we prototype and explore future-facing mixed and augmented reality experiences, without access to AR headsets or real-time immersive telepresence (VR headset digitally removed)?

Wake

Wake is a mixed reality (MR) experience for one participant in-headset, facilitated and performed by two dancers. The experience builds multi-sensory integration through kinesthetic awareness methods, interaction with tactile materials, virtual objects, physical VR hardware, and co-located dancers. Beginning in a darkened virtual environment mapped to the physical environment, the participant’s ongoing interaction with virtual objects illuminates the virtual space. Spoken instructions and dialogue, kinesthetic exercises, and improvised movement facilitate the participant’s navigation of this space. A head-mounted streaming depth camera enables the participant to encounter a co-located and virtually-present dancer who invites the participant to join in simple collaborative movement.

Wake was created by artist and spatial computing researcher Anna Henson and the multidisciplinary performance duo slowdanger (Anna Thompson and Taylor Knight), with research assistance from Qianye Renee Mei and Char Stiles. The project was developed at Carnegie Mellon University, and supported in part by the Frank-Ratchye STUDIO for Creative Inquiry. This project continues Henson and slowdanger’s collaborative research inquiry into embodied interaction, kinesthetic awareness in mediated experiences, trust and emotion within co-present head-mounted XR experiences, and the shifting boundaries between physical and digital.

Wake was developed as a tool to examine the affective, physiological, and interpersonal qualities of head-mounted XR experiences. We began with questions, some concerning the physical situation of the hardware as worn by a participant, some regarding common feelings of disorientation in VR, and others concerning the nature of social experience in which another person was both co-located and co-present. We approached these questions from different perspectives: my background as a designer and technologist, and slowdanger’s experience as dancers and movement researchers. We found common ground in the ways we understood the human role in the intersection of art and technology, and this interdisciplinary collaboration encouraged an expanded dialogue, pushing the boundaries of each of our areas of expertise. We see XR, and specifically the blurred physical/digital boundaries of MR, as a human-machine interface which fundamentally implicates the perceptual and kinesthetic nature of the body and its attendant affective responses.

Wake is built in Unity 3D for the HTC Vive Pro, with Vive trackers worn on the participant’s and dancer’s wrists. An Intel® RealSense™ D415 depth camera is mounted on the front of the VR headset, at the approximate position of the participant’s eyes. Wake uses a custom algorithm to stream the depth camera’s high-resolution RGB+depth video of a physically and virtually co-present dancer and render it in real time.
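The custom streaming and rendering algorithm is not described in this post, so the following is only a generic sketch of one step that RGB+depth rendering commonly involves: turning each depth pixel into a 3D point using a pinhole camera model. The function names and the intrinsics parameters (`fx`, `fy`, `cx`, `cy`) are assumptions for illustration, not taken from Wake or from the RealSense SDK, and lens distortion is ignored.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def deproject_pixel(u: int, v: int, depth_m: float,
                    fx: float, fy: float, cx: float, cy: float) -> Point:
    """Map pixel (u, v) with a depth in metres to a 3D point in camera space.

    Uses an ideal pinhole model: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. All of these are hypothetical inputs.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_image_to_points(depth: List[List[float]],
                          fx: float, fy: float,
                          cx: float, cy: float) -> List[Point]:
    """Deproject every pixel that has a depth reading (0 is treated as 'no data')."""
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d > 0.0:
                points.append(deproject_pixel(u, v, d, fx, fy, cx, cy))
    return points
```

In a real pipeline the resulting points would be connected into a mesh and textured with the matching color pixels; that part is omitted here.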

The first technical priority in creating Wake was to render the dancer photographically, in real time, in the headset. To do this, we needed a high-fidelity stream from the Intel RealSense depth camera running at an acceptable frame rate for VR (90fps). In past projects, I had worked with varying ways of representing dancers’ bodies in VR, using abstract avatars, 3D scanned realistic representations of dancers rigged to marker-based motion capture data, and volumetric point cloud capture, which had provided the closest to lens-based (photography or video) representation of the dancers. However, none of these could be captured and rendered in real time.

In Wake, I wanted to explore the effects of a co-located dancer streamed in real-time to a headset, represented as realistically as possible. This would allow for improvisation between the dancer and participant, and communication through physical movement and facial expressions, as well as the possibility for spoken communication and touch in combination with the visually mediated representation of the dancer in the virtual environment. In the early stages of the project, the Intel RealSense depth camera was newly available. This camera was just the right size to fit on the front of a VR headset, to become a POV (point of view) camera for the participant. Given a scenario similar to a webcam inside a laptop, Intel RealSense technology could enable remote encounters with other people in VR. However, in the context of Wake, we investigated the intersection of telepresence and tangible presence with participants who share physical space.

Eye contact is crucial to the interpretation of the intentions of others. The depth camera enabled eye contact in a real-time VR experience, whereas previous experiences have been limited to animated avatars (where real eye contact is impossible). In the user study (N = 25) conducted on Wake, 24 out of 25 people reported that they felt they made direct eye contact with the dancer. Beyond letting you “see” the dancer when they enter your virtual space, the video carries depth data, and we implemented a depth filtering process that creates a dynamic mask around the mesh of the dancer, so the dancer appears believably inside your virtual space. The mesh is also affected by the lighting in the virtual environment, further immersing the dancer in the virtual space.
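The depth filtering used in Wake is not spelled out here, so the sketch below shows only one simple way such a mask could work: keep the pixels whose depth falls within a band around the dancer’s distance from the camera, and drop everything else. The function name, the `margin_m` parameter, and the idea of taking the dancer’s distance as an input (for example, from a tracker) are assumptions for illustration.

```python
from typing import List


def dancer_mask(depth: List[List[float]],
                dancer_distance_m: float,
                margin_m: float = 0.5) -> List[List[bool]]:
    """Return a per-pixel mask that is True where the dancer is likely to be.

    A pixel is kept when it has a depth reading (> 0) and that reading lies
    within +/- margin_m of the dancer's assumed distance from the camera.
    Recomputing the band every frame makes the mask follow the dancer.
    """
    near = max(dancer_distance_m - margin_m, 0.0)
    far = dancer_distance_m + margin_m
    return [[d > 0.0 and near <= d <= far for d in row] for row in depth]


# Example: a tiny 2x3 depth image in metres, with the dancer about 2 m away.
frame = [[0.0, 1.9, 2.2],
         [3.5, 2.0, 0.4]]
mask = dancer_mask(frame, dancer_distance_m=2.0)
```

Pixels outside the band (the far wall at 3.5 m, a near object at 0.4 m, and the missing reading) are masked out, so only the dancer would be drawn over the virtual environment.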

In Wake, the co-located dancer was also tracked in the virtual environment using Vive trackers. This means that the co-located person can also be co-present in the virtual experience – i.e. they can participate with the person in-headset in shared virtual and physical tasks or experiences, bridging the gap between the physical and digital, and allowing for a liminal shared space to be revealed. From slowdanger’s perspective, “the particle system is my costume, or my fabric that I’m inside of that I move and stretch, and it’s not about me – the participant may not even know the swirling lines they’re seeing are being controlled by a person – they may think it’s a simulation. But when the camera reveals me, the situation becomes more vulnerable because now they can see me in my visible form. It becomes less about me moving to draw their attention to the particle system and more about making them feel like I’m right there with them.”

My research and practice find new ways for developing technologies to co-evolve with human needs for communication, shared experiences, sensory integration, and storytelling. Technology serves the communicative and experiential needs of the project. And when those needs require technologies that do not exist, they must be developed and tested in tandem with the experience as a whole. In the case of Wake, the real-time telepresence and physical presence of a dancer within an environment that existed both tangibly and virtually required a new means of capturing and rendering the dancer. Developing a method for streaming and rendering the data from an Intel RealSense depth camera made this research possible. As researcher Paul Dourish and others make clear, the co-evolution of technologies with human life is inevitable. Therefore, it is crucial that we design technologies, systems, and experiences which help us to thrive together.
