Introduction to Intel® RealSense™ Visual SLAM and the T265 Tracking Camera

GPS tracking has become an essential feature of every modern smartphone. Why? Because understanding your own location in 3D space is extremely helpful. Gone are the days when you had to pick up a map, look at street names and landmarks, orient yourself with a compass, and then keep track of your own location on the map while navigating to your destination. Now it’s possible to get from almost any location on the planet to virtually any other simply by using the device we all carry in our pockets. GPS tracking also brought with it a slew of location-aware services, such as picture geo-tagging and the ability to ask your smart assistant to “take me to the nearest Mexican restaurant.”

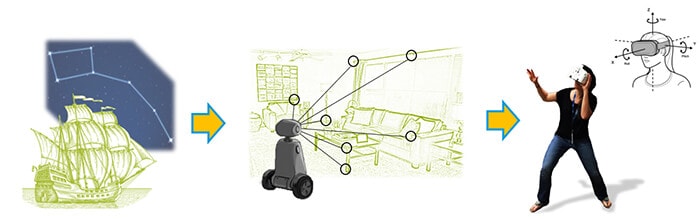

These features are incredible, but in this whitepaper we consider what happens if we can go beyond the performance limits of today’s GPS. What services would become available if we could increase the update rate from 1 Hz to 200 Hz, improve positional accuracy from about 10 m to millimeters, and work indoors as well as outdoors? This is exactly what modern Simultaneous Localization and Mapping (SLAM) solutions aim to do. With this level of performance, a device can track its own pose in six degrees of freedom (6DoF), i.e., its location and orientation in 3D space, using “inside-out” tracking technologies that do not rely on any special fixed antennas, cameras, or markers in the scene.
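To make “six degrees of freedom” concrete, here is a minimal sketch of how a tracked pose could be represented: three translational coordinates plus an orientation, stored here as a unit quaternion (w, x, y, z). The names `Pose6DoF` and `yaw_quaternion` are hypothetical and are not part of the RealSense SDK; the sketch assumes a right-handed world frame with z pointing up and positions in meters.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), assumed to be unit length


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


@dataclass(frozen=True)
class Pose6DoF:
    """Hypothetical 6DoF pose: 3 translational + 3 rotational degrees of freedom."""
    position: Vec3     # location of the device in the world frame (meters)
    orientation: Quat  # rotation from the device frame to the world frame

    def transform_point(self, p: Vec3) -> Vec3:
        """Map a point from the device frame into the world frame: rotate, then translate."""
        w, qx, qy, qz = self.orientation
        q = (qx, qy, qz)
        # Quaternion rotation v' = v + w*t + q x t, with t = 2 (q x v)
        t = tuple(2.0 * c for c in cross(q, p))
        q_cross_t = cross(q, t)
        rotated = tuple(p[i] + w * t[i] + q_cross_t[i] for i in range(3))
        return tuple(rotated[i] + self.position[i] for i in range(3))


def yaw_quaternion(angle_rad: float) -> Quat:
    """Unit quaternion for a rotation of angle_rad about the vertical (z) axis."""
    half = angle_rad / 2.0
    return (math.cos(half), 0.0, 0.0, math.sin(half))
```

For example, a device standing at (1, 2, 0) and turned 90° to the left sees a point 1 m straight ahead, at (1, 0, 0) in its own frame, at approximately (1, 3, 0) in the world frame. A tracker that updates at 200 Hz would refresh such a pose roughly every 5 ms.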

Will SLAM become as important as GPS? It is certainly well on its way to becoming essential to all AR/VR experiences and absolutely critical to any robot or autonomous vehicle that needs to navigate around in a 3D world.

Location awareness has long been essential to our ability to navigate and explore the world around us. In this whitepaper, we explore how embedded SLAM technologies can enable autonomous vehicles, robots, and new AR/VR experiences.

To learn more about SLAM and how it is used, and to get an overview of the Intel RealSense Tracking Camera T265, you can read the full whitepaper here.
