Projection, Texture-Mapping and Occlusion with Intel® RealSense™ Depth Cameras

An Intel RealSense depth camera looks like a single device, so it is easy to forget that it is built from several different sensors. The stereo cameras include two near-infrared image sensors, an infrared emitter and an RGB sensor. The LiDAR camera L515 has an emitter and a sensor for the laser light, with an RGB sensor positioned above them. When you capture an image with any of these cameras, the data for each point combines information from all of these sensors.

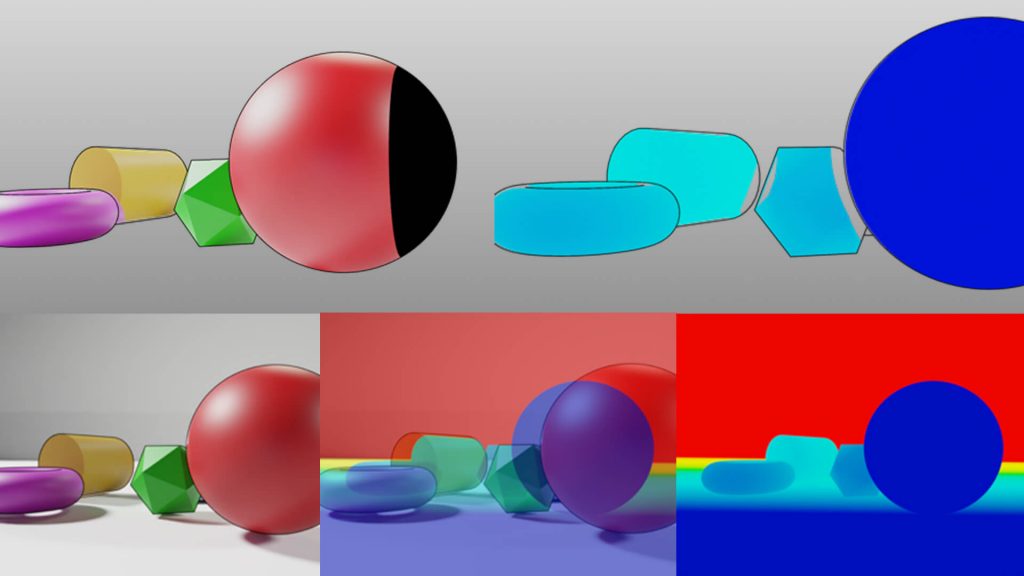

Each point in space has an associated position (its distance from the camera) and color information. The color data comes from the RGB sensor, which sits at a slightly different position than the depth sensor. As a result, where objects overlap or occlude one another, color data can bleed over or be projected onto the wrong surface, as the synthetic example scene below shows.

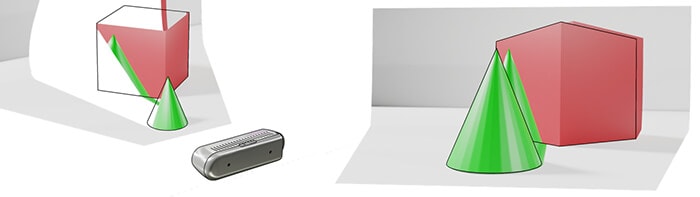

Example showing how the color data from the green cone can be transferred onto the red cube
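To make the idea concrete, here is a minimal sketch of how a depth sample can be mapped into the color image and checked for occlusion. It is not taken from the whitepaper. It assumes a simple pinhole model with no lens distortion, a color sensor offset from the depth sensor only along the x axis, and made-up intrinsics and baseline. The names `Intrinsics`, `depth_to_color_pixel`, `visible_mask` and `baseline_x` are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole parameters in pixels (hypothetical values, no distortion)."""
    fx: float
    fy: float
    cx: float
    cy: float


def deproject(intr, u, v, depth):
    """Turn pixel (u, v) with depth in meters into a 3D point in that sensor's frame."""
    x = (u - intr.cx) / intr.fx * depth
    y = (v - intr.cy) / intr.fy * depth
    return (x, y, depth)


def project(intr, point):
    """Project a 3D point in a sensor's frame onto that sensor's image plane."""
    x, y, z = point
    return (intr.fx * x / z + intr.cx, intr.fy * y / z + intr.cy)


def depth_to_color_pixel(depth_intr, color_intr, baseline_x, u, v, depth):
    """Map one depth sample to the nearest color pixel.

    Assumption: the color sensor is shifted by baseline_x meters along x
    and is not rotated relative to the depth sensor.
    """
    x, y, z = deproject(depth_intr, u, v, depth)
    cu, cv = project(color_intr, (x - baseline_x, y, z))
    return (round(cu), round(cv)), z


def visible_mask(depth_intr, color_intr, baseline_x, samples, tolerance=0.01):
    """Flag which depth samples the color sensor can actually see.

    If several samples land on the same color pixel, only those within
    `tolerance` meters of the nearest one are treated as visible.
    """
    nearest = {}
    mapped = []
    for u, v, depth in samples:
        pixel, z = depth_to_color_pixel(depth_intr, color_intr, baseline_x, u, v, depth)
        mapped.append((pixel, z))
        if pixel not in nearest or z < nearest[pixel]:
            nearest[pixel] = z
    return [z <= nearest[pixel] + tolerance for pixel, z in mapped]


intr = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
# A near sample at 0.5 m and a far sample at 1.0 m, seen at different depth pixels.
samples = [(320, 240, 0.5), (311, 240, 1.0)]
mask = visible_mask(intr, intr, 0.015, samples)
```

In this sketch the two samples occupy different depth pixels but land on the same color pixel, so the far sample would pick up the near object's color if it were textured without a visibility check. Marking it as occluded lets an application skip or otherwise handle that point.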

This whitepaper explores the underlying concepts behind projection, texture mapping and occlusion, along with strategies and methods for handling common problems in these areas.

Read the whitepaper here.
