Types of Tracking Defined

Skeletal Tracking, gestures, object tracking and more.

When talking about computer vision, the word “tracking” comes up a lot. There are a lot of different kinds of tracking, depending on what you are tracking, how you are tracking it, and what you’re trying to achieve. In this post, we’ll talk about a few of those different types of tracking, how they work at a high level, and what you might want to use them for. This post will not cover tracking technologies that require sensors to be attached to an object – like RFID tags.

Skeletal Tracking

Skeletal tracking has been around for a while, and is something you’ve probably encountered before, possibly without realizing it. The Microsoft Kinect was one of the earliest consumer examples of skeletal tracking, using the data from human movement to interact with games.

Skeletal tracking systems usually use depth cameras for the most robust real time results, but it’s also possible to use 2D cameras with open source software to track skeletons at lower frame rates.

In simple terms, skeletal or skeleton tracking algorithms identify the presence of one or more humans, and where their head, body and limbs are positioned. Some systems can also track hands or specific gestures, though this is not true of all skeletal tracking systems. Most can identify a number of joints, for example, shoulders, elbows, wrists. The system then draws lines between all identified joints, as well as showing something to represent the head/neck.

Using depth cameras of any kind allows the skeletal tracking system to disambiguate between overlapping or occluded objects or limbs, as well as making the system more robust to different lighting conditions than a solely 2D camera-based algorithm would. There are a number of skeletal tracking solutions today that support Intel® RealSense™ depth cameras.

For a more in depth look at skeletal tracking, please check out this talk from Philip Krejov about body tracking in VR/AR with Intel® RealSense™ depth cameras.

Gesture tracking and Hand tracking

Frequently confused for each other, gesture tracking and hand tracking do have things in common – both allow a user to interact with some form of digital content using their hands. Gesture tracking, however, can usually be considered limited to specific hand shapes and gestures, for example a fist, OK hand gesture, or open palm gesture. The advantage of such a system is that usually the gestures can be recognized with high confidence, the disadvantage is that human users generally can remember no more than five gestures and what they stand for – it takes a lot longer to train a user for a more complex system with more gestures without the user getting confused.

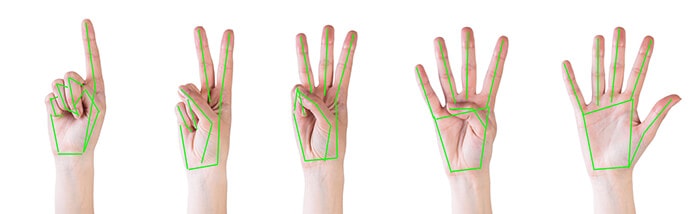

Hands with example finger tracking overlay.

A hand tracking system usually is more ambiguous than a gesture tracked system – similar to skeletal tracking, in many hand tracking systems, the joints and bones of the fingers are identified, again, usually using some kind of depth camera to help with occlusion and ambiguous situations such as one finger crossed over another. Hand tracking systems allow for more complicated interactions with digital content than gesture systems do, since individual fingers can interact with virtual content in a variety of ways, such as pushing or moving objects around, scaling them, pressing virtual buttons and more.

Object tracking

Object tracking has two separate features commonly associated with it, object detection and classification, followed by tracking where that object moves to. There are a variety of methods to detect objects, using some variation of machine learning or deep learning. As we have discussed in the past, machine learning involves training a system on thousands of images that have been classified and tagged and uses that data to identify unknown objects in new images. You can try out a system that identifies any image you upload or link to by visiting this caption bot.

For video or live camera feeds, once an object has been detected, it can be followed from frame to frame in a similar way, allowing a bounding box surrounding the object to have its motion tracked, for example, following a vehicle from frame to frame in a video.

People tracking

People tracking can be seen as a subset of either object tracking or skeletal tracking, depending on the end goal of a people tracking system – is the goal to track the number of shoppers in a store, or to be able to allow them to interact with digital signs as they move around in front of them? Depending on your use case, you would use either the gesture or skeletal tracking methodologies, or the object tracking methods to identify ‘person’ within a frame.

Street scene with object recognition bounding boxes around people and vehicles.

Eye tracking/Gaze tracking

Eye tracking and gaze tracking are methods which allow someone to interact with a digital system using only their eyes, or their eyes and combinations of other technology. An eye tracking system involves cameras (depth or other) pointed either at someone’s face or more closely at their eyes. By tracking how the eye (specifically the pupil) moves around, the direction of someone’s gaze can be measured. This is useful in general analytics; being able to track where someone looks within an application can give valuable user experience insights. It’s also useful in accessibility solutions since eye tracking can reduce or remove the need to use a mouse to interact with a screen, something which can be much more comfortable for users with carpal tunnel problems, for example. Eyeware is software that supports Intel® RealSense™ D400 series depth cameras for eye and gaze tracking.

SLAM tracking

Simultaneous Localization and Mapping, or SLAM is a different concept than all the other tracking mentioned thus far. The major difference is that SLAM devices track their own movement, relative to the world, rather than tracking the movement of objects within the field of view of the camera. SLAM devices such as the Intel® RealSense™ Tracking Camera T265 uses a combination of inertial sensors and visual input from two cameras to precisely track its own movement in space. This sort of technology is extremely useful in virtual and augmented reality headsets – both the Microsoft Hololens and the Oculus Rift S and Oculus Quest utilize their own versions of SLAM – sometimes also referred to as inside out tracking. It’s also very useful for robotics and drones, since knowing where something is and how it moves in a space allows it to accurately navigate the world.

Subscribe here to get blog and news updates.

You may also be interested in

“Intel RealSense acts as the eyes of the system, feeding real-world data to the AI brain that powers the MR

In a three-dimensional world, we still spend much of our time creating and consuming two-dimensional content. Most of the screens