Latest features.

We constantly add new features to our SDK to expand the capabilities of your project.

Open3D

Get started with open source 3D data processing.

The Intel RealSense SDK 2.0 is now integrated with Open3D, an open-source library designed for processing 3D data. Open3D supports rapid development of software for 3D data processing, including scene reconstruction, visualization, and 3D machine learning.

ROS

TensorFlow

OpenVINO™

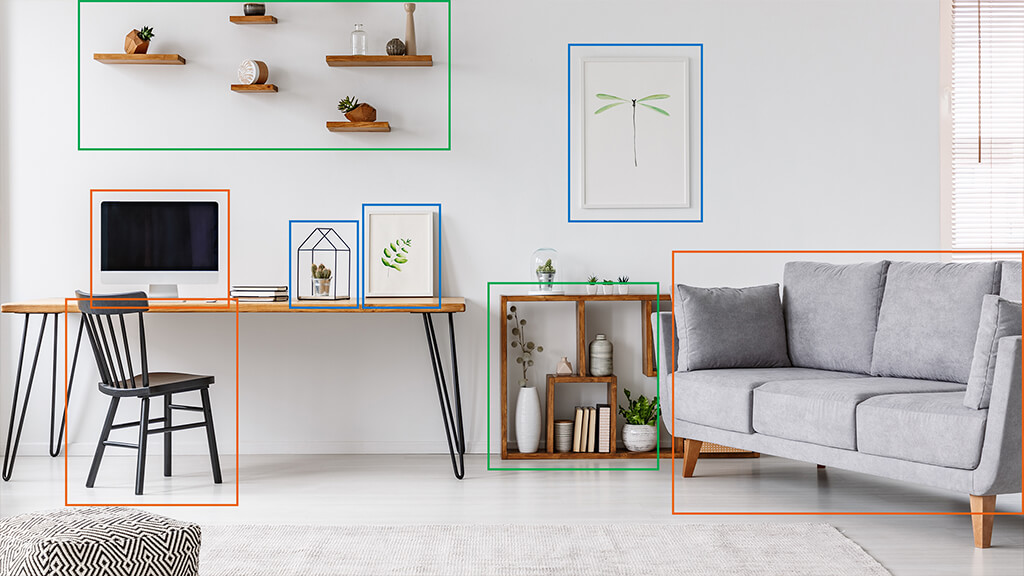

Intel® OpenVINO™ integration with Intel® RealSense™.

If you have been looking for an easy way to add object recognition or machine learning to your depth-enabled project, we are happy to share that OpenVINO™ is now integrated into the Intel RealSense Viewer software, allowing you to detect faces and more with ease.

Build it your way.

Our SDK supports an extensive range of programming languages and development platforms and is compatible with a wide range of devices running any of the supported operating systems listed below.

Frameworks and wrappers

Code samples

Get your project off the ground quickly with help from our code examples and tutorials.

Hello

#include <librealsense2/rs.hpp> // Include RealSense Cross Platform API

#include <iostream> // std::cout

// Create a Pipeline - this serves as a top-level API for streaming and processing frames

rs2::pipeline p;

// Configure and start the pipeline

p.start();

// Block program until frames arrive

rs2::frameset frames = p.wait_for_frames();

// Try to get a frame of a depth image

rs2::depth_frame depth = frames.get_depth_frame();

// Get the depth frame's dimensions

int width = depth.get_width();

int height = depth.get_height();

// Query the distance from the camera to the object in the center of the image

float dist_to_center = depth.get_distance(width / 2, height / 2);

// Print the distance

std::cout << "The camera is facing an object " << dist_to_center << " meters away \r";

rs-hello-realsense

A basic demonstration of connecting to an Intel RealSense device and using its depth data by printing the distance to the object at the center of the camera's field of view.
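To make the depth-to-distance step concrete, here is a minimal, library-free sketch of what a center-pixel distance query amounts to. It assumes a row-major buffer of 16-bit raw depth values and a depth scale of 0.001 meters per raw unit; the real scale depends on the device and should be read from it rather than hard-coded. The names `DepthImage` and `distance_at` are hypothetical and are not part of the SDK.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container for a raw depth image (not an SDK type).
struct DepthImage {
    int width = 0;
    int height = 0;
    float depth_scale = 0.001f;       // assumed meters per raw unit
    std::vector<std::uint16_t> data;  // row-major, width * height values
};

// Distance in meters at pixel (x, y). A raw value of 0 is treated as
// "no depth data" and therefore yields 0.0.
float distance_at(const DepthImage& img, int x, int y) {
    assert(x >= 0 && x < img.width && y >= 0 && y < img.height);
    const std::size_t index = static_cast<std::size_t>(y) * img.width + x;
    return img.data[index] * img.depth_scale;
}
```

Calling `distance_at(img, img.width / 2, img.height / 2)` mirrors the center-pixel query in the sample above. Integer pixel coordinates are used deliberately, since a pixel index has no fractional part.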

Simple depth capture

#include <librealsense2/rs.hpp> // Include Intel RealSense Cross Platform API

#include "example.hpp" // Include short list of convenience functions for rendering

// Capture Example demonstrates how to

// capture depth and color video streams and render them to the screen

int main(int argc, char * argv[]) try

{

rs2::log_to_console(RS2_LOG_SEVERITY_ERROR);

// Create a simple OpenGL window for rendering:

window app(1280, 720, "RealSense Capture Example");

// Declare depth colorizer for pretty visualization of depth data

rs2::colorizer color_map;

// Declare rates printer for showing streaming rates of the enabled streams.

rs2::rates_printer printer;

// Declare RealSense pipeline, encapsulating the actual device and sensors

rs2::pipeline pipe;

// Start streaming with default recommended configuration

// The default video configuration contains Depth and Color streams

// If a device is capable of streaming IMU data, both Gyro and Accelerometer are enabled by default

pipe.start();

while (app) // Application still alive?

{

rs2::frameset data = pipe.wait_for_frames(). // Wait for next set of frames from the camera

apply_filter(printer). // Print each enabled stream frame rate

apply_filter(color_map); // Find and colorize the depth data

// The show method, when applied to a frameset, breaks it into frames and uploads each frame into a GL texture

// Each texture is displayed in a different viewport according to its stream's unique id

app.show(data);

}

return EXIT_SUCCESS;

}

catch (const rs2::error & e)

{

std::cerr << "RealSense error calling " << e.get_failed_function() << "(" << e.get_failed_args() << "):\n " << e.what() << std::endl;

return EXIT_FAILURE;

}

catch (const std::exception& e)

{

std::cerr << e.what() << std::endl;

return EXIT_FAILURE;

}

rs-capture

This sample demonstrates how to configure the camera for streaming and rendering Depth & RGB data to the screen.

Generating pointcloud

#include <librealsense2/rs.hpp> // Include RealSense Cross Platform API

#include "example.hpp" // Include short list of convenience functions for rendering

#include <algorithm> // std::min, std::max

// Helper functions

void register_glfw_callbacks(window& app, glfw_state& app_state);

int main(int argc, char * argv[]) try

{

// Create a simple OpenGL window for rendering:

window app(1280, 720, "RealSense Pointcloud Example");

// Construct an object to manage view state

glfw_state app_state;

// Register callbacks to allow manipulation of the pointcloud

register_glfw_callbacks(app, app_state);

// Declare pointcloud object, for calculating pointclouds and texture mappings

rs2::pointcloud pc;

// We want the points object to be persistent so we can display the last cloud when a frame drops

rs2::points points;

// Declare RealSense pipeline, encapsulating the actual device and sensors

rs2::pipeline pipe;

// Start streaming with default recommended configuration

pipe.start();

while (app) // Application still alive?

{

// Wait for the next set of frames from the camera

auto frames = pipe.wait_for_frames();

auto color = frames.get_color_frame();

// For cameras that don't have an RGB sensor, we'll map the pointcloud to infrared instead of color

if (!color)

color = frames.get_infrared_frame();

// Tell pointcloud object to map to this color frame

pc.map_to(color);

auto depth = frames.get_depth_frame();

// Generate the pointcloud and texture mappings

points = pc.calculate(depth);

// Upload the color frame to OpenGL

app_state.tex.upload(color);

// Draw the pointcloud

draw_pointcloud(app.width(), app.height(), app_state, points);

}

return EXIT_SUCCESS;

}

catch (const rs2::error & e)

{

std::cerr << "RealSense error calling " << e.get_failed_function() << "(" << e.get_failed_args() << "):\n " << e.what() << std::endl;

return EXIT_FAILURE;

}

catch (const std::exception & e)

{

std::cerr << e.what() << std::endl;

return EXIT_FAILURE;

}

rs-pointcloud

This sample demonstrates how to generate and visualize a textured 3D point cloud.
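For readers curious about what generating a point cloud from a depth image involves, the sketch below back-projects depth pixels into 3D using a plain pinhole camera model. It is an illustration under stated assumptions, not the SDK's implementation: lens distortion is ignored, pixels without depth are skipped rather than kept as empty vertices, and `Intrinsics`, `Point3`, and `deproject` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical pinhole intrinsics (not an SDK type); distortion is ignored.
struct Intrinsics {
    int width, height;
    float fx, fy;    // focal lengths in pixels
    float ppx, ppy;  // principal point in pixels
};

struct Point3 { float x, y, z; };

// Back-project every valid depth pixel into camera space (meters).
std::vector<Point3> deproject(const std::vector<std::uint16_t>& depth,
                              const Intrinsics& in, float depth_scale) {
    assert(depth.size() == static_cast<std::size_t>(in.width) * in.height);
    std::vector<Point3> cloud;
    cloud.reserve(depth.size());
    for (int v = 0; v < in.height; ++v) {
        for (int u = 0; u < in.width; ++u) {
            const std::uint16_t raw =
                depth[static_cast<std::size_t>(v) * in.width + u];
            if (raw == 0) continue;  // skip pixels with no depth
            const float z = raw * depth_scale;
            cloud.push_back({(u - in.ppx) / in.fx * z,
                             (v - in.ppy) / in.fy * z,
                             z});
        }
    }
    return cloud;
}
```

Each output point scales the pixel's offset from the principal point by its depth, so pixels farther from the image center map to larger lateral distances at the same depth.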

The tools to build your own vision.

SDK tools help you harness your depth camera’s capabilities.

Intel® RealSense™ Viewer

Quickly access your Intel RealSense depth camera to view the depth stream, visualize point clouds, record and play back streams, configure your camera settings, and more.

Depth Quality Tool

Test the camera's depth quality with metrics and tools including plane-fit RMS error, subpixel accuracy, fill rate, distance accuracy compared to ground truth, on‑chip self‑calibration, and tare calibration.
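As a rough illustration of two of these metrics, the sketch below computes a fill rate and an RMS error over a buffer of raw 16-bit depth values. The definitions are assumptions made for the example and may differ from what the Depth Quality Tool computes: fill rate is taken as the percentage of non-zero pixels, and the RMS error is measured against a known target distance rather than against a fitted plane. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Percentage of pixels that carry a valid (non-zero) depth value.
double fill_rate_percent(const std::vector<std::uint16_t>& depth) {
    if (depth.empty()) return 0.0;
    std::size_t valid = 0;
    for (std::uint16_t raw : depth) {
        if (raw != 0) ++valid;
    }
    return 100.0 * static_cast<double>(valid) /
           static_cast<double>(depth.size());
}

// RMS deviation (meters) of valid pixels from a known target distance.
// Simplification: a plane-fit metric would first fit a plane to the data.
double rms_error_m(const std::vector<std::uint16_t>& depth,
                   double depth_scale, double ground_truth_m) {
    assert(depth_scale > 0.0);
    double sum_sq = 0.0;
    std::size_t valid = 0;
    for (std::uint16_t raw : depth) {
        if (raw == 0) continue;  // ignore pixels with no depth
        const double err = raw * depth_scale - ground_truth_m;
        sum_sq += err * err;
        ++valid;
    }
    return valid == 0 ? 0.0 : std::sqrt(sum_sq / static_cast<double>(valid));
}
```

In practice you would run both functions over a region of interest covering a flat target placed at a measured distance from the camera.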

Calibration

Calibration ensures the best operation of our stereo cameras. From simple and fast Intel RealSense Self-calibration to OEM calibration, we offer a variety of techniques to keep your camera or module working at its optimal performance.

* The Apple logo is a trademark of Apple Inc., registered in the U.S. and other countries.