Using TensorFlow with Intel RealSense Depth Cameras

There are many situations where using a depth camera as input into a machine learning system can offer additional benefits and features – as we previously discussed in a prior blog post “What does Depth Bring to Machine Learning.” Depth cameras have a number of advantages for a variety of machine learning problems, including lighting invariance. Depth cameras can easily adapt to a wide variety of lighting conditions, something that 2D models must be trained to compensate for. A depth camera also allows differentiation between items of different size as well as easier background segmentation, allowing the separation of individual items regardless of how complicated the background is.

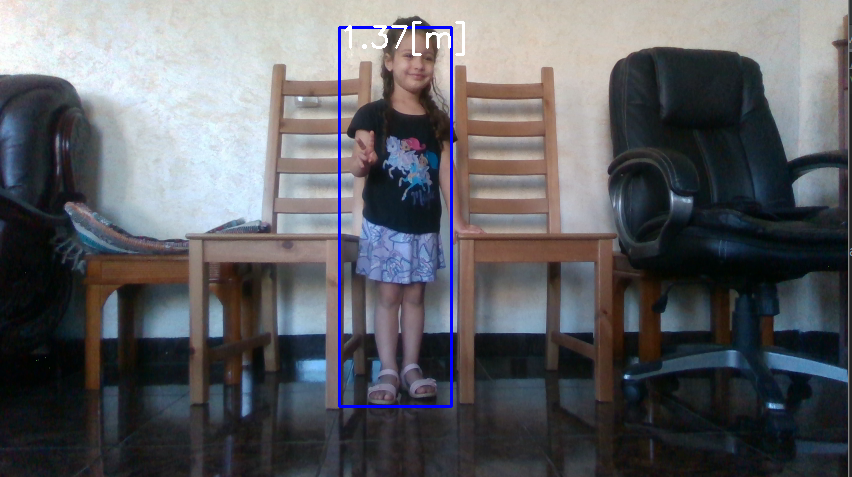

Image of a small child with a bounding box showing approximated height using TensorFlow and Intel RealSense Depth cameras

One of the main problems facing those wishing to use depth cameras for machine learning projects at the current time is the lack of significant training datasets, when compared with the existing libraries and models based on many thousands of 2d images. Given how complex machine learning is, it’s useful to have frameworks that assist with acquiring datasets, building models and implementing them.

TensorFlow from Google is one of the more commonly used end-to-end open source machine learning platforms. It combines a number of machine learning and deep learning models and algorithms and makes them useful by using a common metaphor. It uses Python for a convenient front-end API for building applications within the framework, and then executes those applications in high performance C++.

In this How-To guide, you will learn how to easily implement TensorFlow with Intel RealSense Depth cameras. It also features tools you can use to improve your understanding of your models and images. This guide utilizes Python, but the same concepts can be easily ported to other languages.

Subscribe here to get blog and news updates.

You may also be interested in

“Intel RealSense acts as the eyes of the system, feeding real-world data to the AI brain that powers the MR

In a three-dimensional world, we still spend much of our time creating and consuming two-dimensional content. Most of the screens